The internet has supercharged the spread of misinformation, Brunswick’s Barney Southin and Louise Ward explain. What’s next?

In April 2013, with tensions on the Korean peninsula once again simmering, 40,000 residents of the coastal Japanese city of Yokohama received a worrying message via Twitter from the city’s crisis control room, which read: “North Korea has launched a missile,” the clear implication being that it was headed straight for the city.

Thankfully, the tweet proved to be a false alarm. Having removed the message from Twitter, a red-faced city official later told journalists: “We had the tweet ready and waiting [for such a situation], but for an unknown reason it was dispatched erroneously.” Better the message than the missile, but it is easy to imagine how the reaction could have been a lot worse.

The Yokohama tweet was just one of a string of recent instances of “digital misinformation,” the unintentional spread of false or inaccurate information on the internet. As with Yokohama, many were caused by trigger-happy tweeters.

In the past two years, such internet bloopers and propaganda have caused a stir in many countries, including the US, the UK, China, Russia, Mexico and India. With the global online population predicted to increase from 2.4 billion to 3.6 billion by 2017 (almost half the world’s population), such instances will inevitably become commonplace.

The risks from this trend are worrying for governments and businesses. In its Global Risks 2013 report, the World Economic Forum flagged digital misinformation for the first time, warning that “a false rumor spreading virally through social networks could have a devastating impact before being effectively corrected.” Businesses are equally alarmed, as a 2013 Deloitte report (Exploring Strategic Risk) showed, with most of the senior executives surveyed citing the risk of damage to reputation as the biggest immediate threat to their businesses, rising from the third-biggest concern in a similar survey in 2010.

HOW SERIOUS A RISK is the spread of false information via the internet, and what can be done to mitigate it?

The threat of mass hysteria from misinformation is, of course, nothing new. One famous example dates from 1938, when Orson Welles’s radio adaptation of H.G. Wells’s novel The War of the Worlds started so convincingly that it caused widespread panic among Americans tricked into believing their country was actually being invaded by Martians.

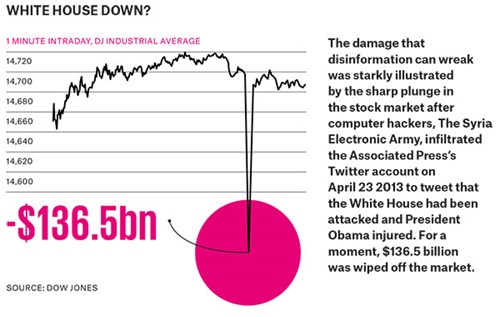

The worry now is that the internet has supercharged the threat because of the speed of communication and increased global connectivity. A recent warning of the potential for damage came in spring 2013, when a false tweet claiming that the White House had been attacked and President Obama injured was sent out from the Associated Press Twitter account. It temporarily wiped billions of dollars off the value of shares before a correction could be made. The hacking group the Syrian Electronic Army later claimed responsibility.

The “prophet of mass media,” philosopher Marshall McLuhan, wrote in the 1960s about how the nature of modern mass media meant that the way people interact with it had become more important than the content itself – “the medium is the message,” as he said.

With the internet and social media, where information is transmitted and re-transmitted almost perpetually in real time, McLuhan’s concept has become even more apt, and interest in his ideas has been rekindled. Furthermore, digital media has exacerbated the human instinct to believe bad news over good news, or false information that reinforces prejudices over true information that contradicts them. This can be seen at work in a number of recent examples.

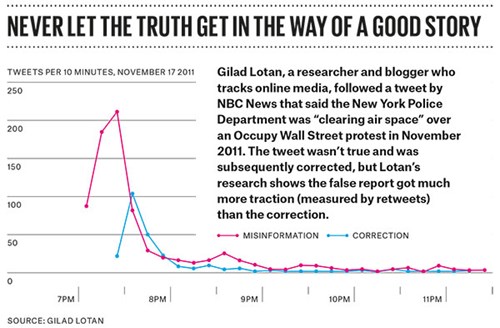

At a Harvard University conference in 2012 (“Truthiness in Digital Media”), Gilad Lotan, Chief Data Scientist at tech incubator Betaworks, presented a compelling case study of the stickiness of false information when it fits the zeitgeist. He tracked an erroneous tweet by the New York bureau of NBC News that claimed that the New York Police Department (NYPD) moved NBC’s helicopter away from Occupy Wall Street (OWS) protests and were “closing air space” in the area.

The tweet was broadcast on November 17, 2011, the “global day of action” organized by OWS, when tension between the police and protestors was running high after police evictions from a downtown park. “The environment was incredibly heated and everyone expected violence to erupt,” Lotan recalled.

Within five minutes of NBC’s tweet, it was reposted by a number of reporters from other news organizations, including The New York Times, and these in turn were retweeted multiple times, with angry messages appended such as “this is what democracy looks like.”

Within five minutes, the NYPD tweeted “NYPD has never closed airspace and it is not our authority to do so,” and NBC quickly issued a correction. But the original false story had already taken on a life of its own and the correction never got anything like the traction that the false report did.

“People are much more likely to retweet what they want to be true, their aspirations and values,” Lotan says. “Does misinformation always spread further than the correction? Not necessarily ... But I can safely say that the more sensationalized a story, the more likely it is to travel far.”

A false report that resists correction is bad enough for a government or company, but it can have long-lasting consequences for an individual. One high-profile victim was the late British politician Lord McAlpine who, following a BBC television program in November 2012, was mistakenly accused of wrongdoing in around 10,000 speculative tweets and retweets. He won substantial damages from the media, including the BBC, which paid £185,000 ($300,000), and from high-profile tweeters such as the House of Commons Speaker’s wife, Sally Bercow, who later tweeted: “I hope others have learned tweeting can inflict real harm on people’s lives.” He received public acknowledgment that he was completely innocent, together with apologies and damages, yet his case shows that deleting even demonstrably false information from the internet is extremely difficult, if not impossible.

ONE RESULT of this supercharged misinformation flow has been a backlash, with some ironic twists. Even though it is the newer, unfettered media that have facilitated the spread of misinformation, public trust in traditional media continues to decline. A Pew report in 2012 found that only 56 percent of Americans believe what they read in “legacy” media – the highest level of distrust since the survey began in 2002. Sorting online truth from fiction is getting harder: absurdist “news” items from satirical sites such as The Onion (“Consumers Required to Seek Treasury Department Approval For Purchases Over $50”) have been taken as genuine by some gullible readers, and even by serious news outlets.

In China, spreading “false rumors” can now result in up to three years in prison, though the West has mostly resisted efforts to curb free speech through the courts. There are some signs of self-correction. LazyTruth and Truthy, which are websites specifically designed to help internet users assess the credibility of online information, join a bevy of well-established debunking sites, such as Factcheck.org and Snopes.com.

A recent hoax suggests the public is becoming more skeptical. A fake tweet claiming the ex-director of the National Security Agency had been shot dead at Los Angeles International Airport was widely circulated, but unlike the earlier example of the NBC New York tweet, the number of online corrections quickly surpassed the initial piece of misinformation.

It will be interesting to see whether “truthiness” continues to gain ground on rumor and disinformation.

FIRST, DO NO HARM

Prevention is the best policy in managing the risk of misinformation

There are essentially two scenarios for companies facing the threat of misinformation via the internet: internal and external. Within a company, the threat may come from a disgruntled employee or technical failure. External threats can come from anywhere and strike at any time.

Prevention is best, of course, because the damage – especially from misinformation put out via an official channel, such as a company Twitter account – can be extremely hard to contain and correct.

Establishing good internal social media governance and training can do much to reduce the risk. This includes having defined roles and responsibilities; guidance on appropriate content, tone, and language; and a clearly defined purpose for each channel, including a social media task force in times of crisis.

Companies have found that a blanket ban on personal use of social media in office hours or on work devices is not a good idea. Not only are bans onerous to enforce, but in some countries, including the US, they can violate employment law.

For external misinformation scenarios, monitoring conversations across social media – not confined to Twitter and Facebook – is important: done effectively, it gives a company the ability to detect misinformation early. Human involvement is key to this, for while digital monitoring tools are good at collecting data, they remain poor at analyzing it.

Companies need to watch their online “influencers”: the people and organizations with the power to shape their reputation on the internet. Once misinformation starts to spread, and is picked up and repeated by these influencers, it can be very difficult to change the narrative.

On the other hand, these influencers can be powerful allies in stopping the spread of misinformation if companies have invested time and resources in identifying and strengthening relationships with them. For example, issuing a company statement or correction won’t gain traction unless it is validated by third parties with loud megaphones.

If that weren’t enough to worry about, there is a further threat to companies: false information being spread with malicious intent through digital media – “disinformation,” which includes hacking and hoaxes.

Companies, like sailors, can forecast stormy weather or take heed of the tides to prepare for a safe journey. But at the end of the day, social media will ebb and flow at its own pace.

This article is also available in Chinese.

BARNEY SOUTHIN is an Associate in Brunswick’s London office. He specializes in digital and social media communication. LOUISE WARD is an Account Director in Brunswick’s New York office.